Publications

As part of my graduate studies, I've worked with my peers to conduct research studies across several projects. The projects listed on this page have been formally accepted at academic conferences. Check back to see future accepted works!

Journal

Working in Extended Reality in the Wild: Worker and Bystander Experiences of XR Virtual Displays in Public Real-World Settings

Although access to sufficient screen space is crucial to knowledge work, workers often find themselves with limited access to display infrastructure in remote or public settings. While virtual displays can be used to extend the available screen space through extended reality (XR) head-worn displays (HWD), we must better understand the implications of working with them in public settings from both users' and bystanders' viewpoints. To this end, we conducted two user studies. We first explored the usage of a hybrid AR display across real-world settings and tasks. We focused on how users take advantage of virtual displays and what social and environmental factors impact their usage of the system. A second study investigated the differences between working with a laptop, an AR system, or a VR system in public. We focused on a single location and participants performed a predefined task to enable direct comparisons between the conditions while also gathering data from bystanders. The combined results suggest a positive acceptance of XR technology in public settings and show that virtual displays can be used to accompany existing devices. We highlighted some environmental and social factors. We saw that previous XR experience and personality can influence how people perceive the use of XR in public. In addition, we confirmed that using XR in public still makes users stand out and that bystanders are curious about the devices, yet have no clear understanding of how they can be used.

Gestures vs. Emojis: Comparing Non-Verbal Reaction Visualizations for Immersive Collaboration

Collaborative virtual environments afford new capabilities in telepresence applications, allowing participants to co-inhabit an environment to interact while being embodied via avatars. However, shared content within these environments often takes away the attention of collaborators from observing the non-verbal cues conveyed by their peers, resulting in less effective communication. Exaggerated gestures, abstract visuals, as well as a combination of the two, have the potential to improve the effectiveness of communication within these environments in comparison to familiar, natural non-verbal visualizations. We designed and conducted a user study where we evaluated the impact of these different non-verbal visualizations on users’ identification time, understanding, and perception. We found that exaggerated gestures generally perform better than non-exaggerated gestures, abstract visuals are an effective means to convey intentional reactions, and the combination of gestures with abstract visuals provides some benefits compared to their standalone counterparts.

Conference

Exploring Bichronous Collaboration in Virtual Environments

Virtual environments (VEs) empower geographically distributed teams to collaborate on a shared project regardless of time. Existing research has separately investigated collaborations within these VEs at the same time (i.e., synchronous) or different times (i.e., asynchronous). In this work, we highlight the often-overlooked concept of bichronous collaboration and define it as the seamless integration of archived information during a real-time collaborative session. We revisit the time-space matrix of computer-supported cooperative work (CSCW) and reclassify the time dimension as a continuum. We describe a system that empowers collaboration across the temporal states of the time continuum within a VE during remote work. We conducted a user study using the system to discover how the bichronous temporal state impacts the user experience during a collaborative inspection. Findings indicate that the bichronous temporal state is beneficial to collaborative activities for information processing, but has drawbacks such as changed interaction and positioning behaviors in the VE.

Investigating the Influence of Playback Interactivity during Guided Tours for Asynchronous Collaboration in Virtual Reality

Collaborative virtual environments allow workers to contribute to team projects across space and time. While much research has closely examined the problem of working in different spaces at the same time, few studies have investigated best practices for collaborating in those spaces at different times beyond textual and auditory annotations. We designed a system that allows experts to record a tour inside a virtual inspection space, preserving knowledge and providing later observers with insights through a 3D playback of the expert's inspection. We also created several interactions to ensure that observers are tracking the tour and remaining engaged. We conducted a user study to evaluate the influence of these interactions on an observing user's information recall and user experience. Findings indicate that independent viewpoint control during a tour enhances the user experience compared to fully passive playback and that additional interactivity can improve auditory and spatial recall of key information conveyed during the tour.

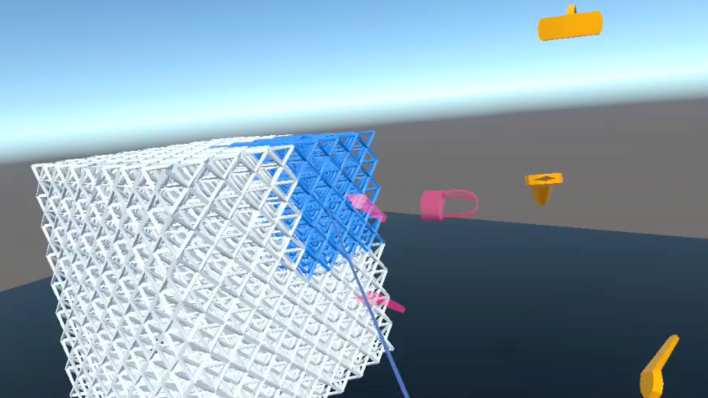

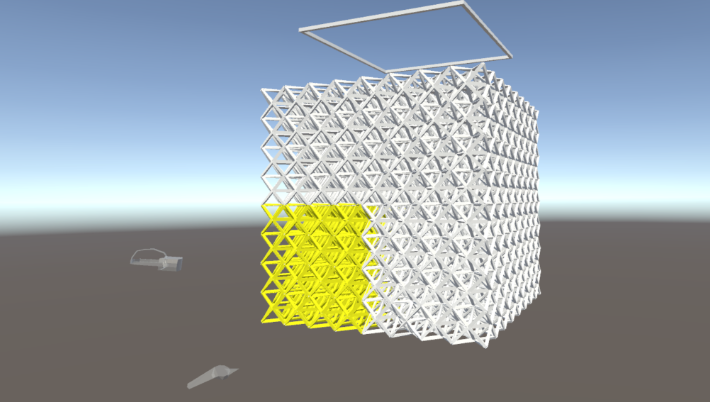

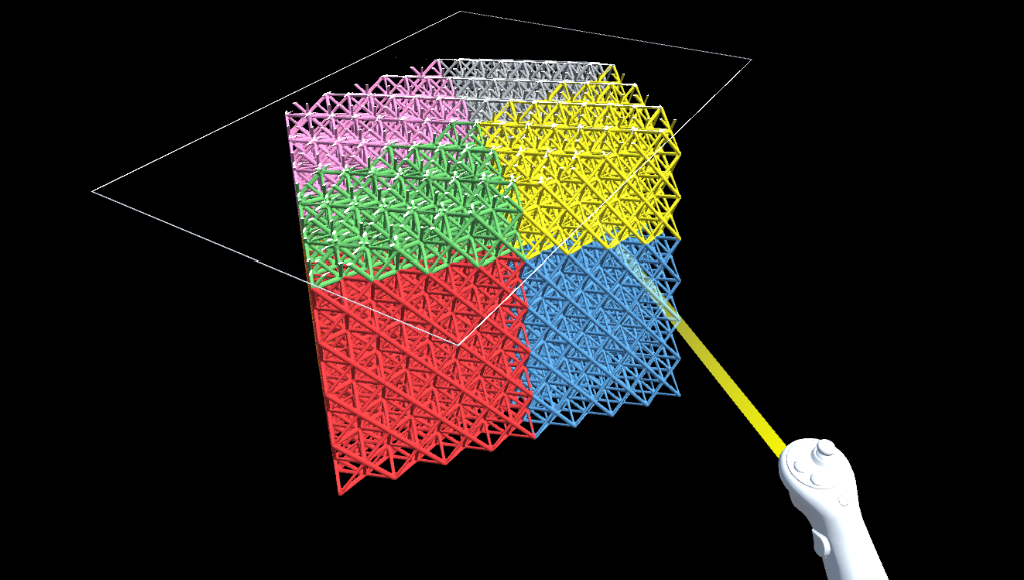

Exploring Multiscale Navigation of Homogeneous and Dense Objects with Progressive Refinement in Virtual Reality

Locating small features in a large, dense object in virtual reality (VR) poses a significant interaction challenge. While existing multiscale techniques support transitions between various levels of scale, they are not focused on handling dense, homogeneous objects with hidden features. We propose a novel approach that applies the concept of progressive refinement to VR navigation, enabling focused inspections. We conducted a user study where we varied two independent variables in our design, navigation style (STRUCTURED vs. UNSTRUCTURED) and display mode (SELECTION vs. EVERYTHING), to better understand their effects on efficiency and awareness during multiscale navigation. Our results showed that unstructured navigation can be faster than structured and that displaying only the selection can be faster than displaying the entire object. However, using an everything display mode can support better location awareness and object understanding.

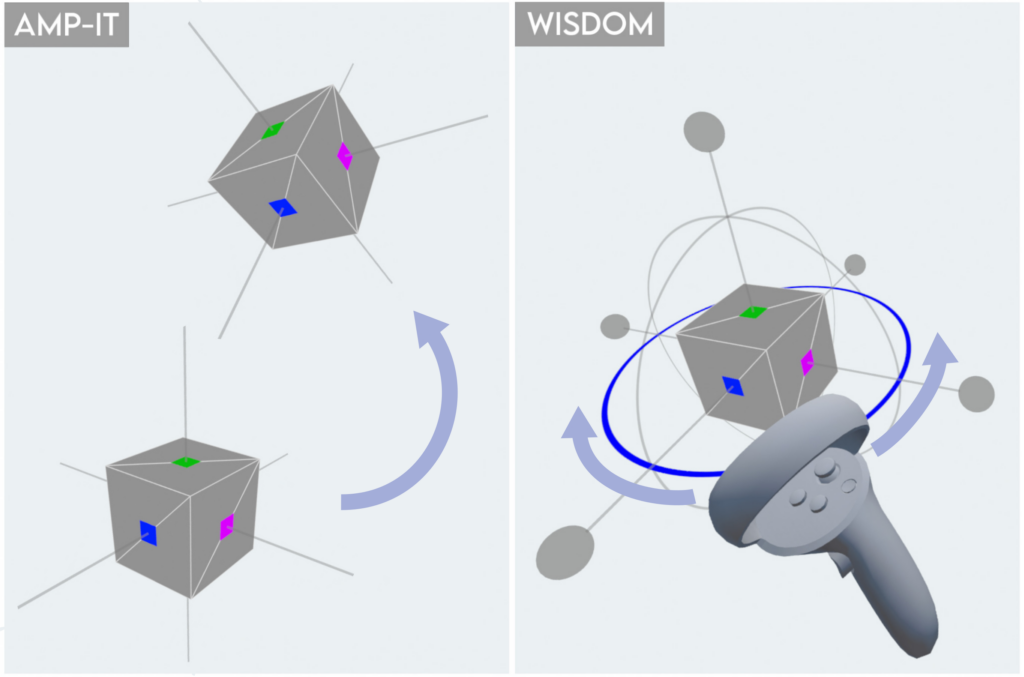

AMP-IT and WISDOM: Improving 3D Manipulation for High-Precision Tasks in Virtual Reality

Precise 3D manipulation in virtual reality (VR) is essential for effectively aligning virtual objects. However, state-of-the-art VR manipulation techniques have limitations when high levels of precision are required, including the unnaturalness caused by scaled rotations and the increase in time due to degree-of-freedom (DoF) separation in complex tasks. We designed two novel techniques to address these issues: AMP-IT, which offers direct manipulation with an adaptive scaled mapping for implicit DoF separation, and WISDOM, which offers a combination of Simple Virtual Hand and scaled indirect manipulation with explicit DoF separation. We compared these two techniques against baseline and state-of-the-art manipulation techniques in a controlled experiment. Results indicate that WISDOM and AMP-IT have significant advantages over best-practice techniques regarding task performance, usability, and user preference.

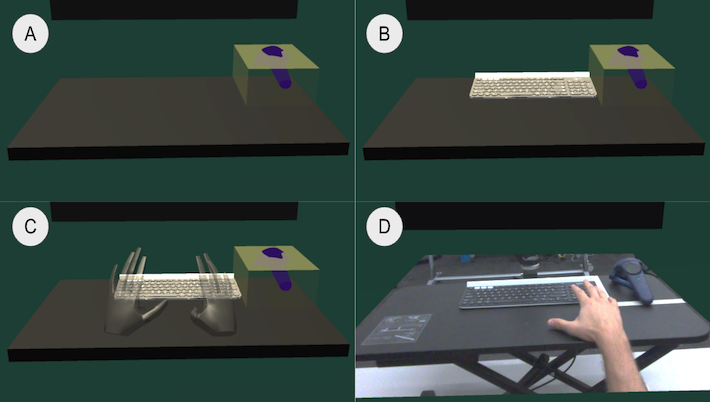

Exploring the Impact of Visual Information on Intermittent Typing in Virtual Reality

For touch typists, using a physical keyboard ensures optimal text entry task performance in immersive virtual environments. However, successful typing depends on the user’s ability to accurately position their hands on the keyboard after performing other, non-keyboard tasks. Finding the correct hand position depends on sensory feedback, including visual information. We designed and conducted a user study where we investigated the impact of visual representations of the keyboard and users' hands on the time required to place hands on the homing bars of a keyboard after performing other tasks. We found that this keyboard homing time decreased as the fidelity of visual representations of the keyboard and hands increased, with a video pass-through condition providing the best performance. We discuss additional impacts of visual representations of a user's hands and the keyboard on typing performance and user experience in virtual reality.

Abstracts & Workshops

Planet Purifiers: A Collaborative Immersive Experience Proposing New Modifications to HOMER and Fishing Reel Interaction Techniques

This paper presents our solution to the 2025 3DUI Contest challenge. We aimed to develop a collaborative, immersive experience that raises awareness about trash pollution in natural landscapes while enhancing traditional interaction techniques in virtual environments. To achieve these objectives, we created an engaging multiplayer game where one user collects harmful pollutants while the other user provides medication to impacted wildlife using enhancements to two traditional interaction techniques: HOMER and Fishing Reel. We enhanced HOMER with a cone selection volume, which reduces the precise aiming that a selection raycast requires and provides a more efficient means of collecting pollutants at large distances; we call this technique FLOW-MATCH. To improve the distribution of animal feed to wildlife far from the user with Fishing Reel, we created RAWR-XD, an asymmetric bimanual technique that lets the user adjust the reeling speed more conveniently by rotating the wrist of the non-selecting hand.
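To illustrate why a cone volume eases aiming compared with a thin ray, here is a minimal sketch of generic cone-based selection. It is not the FLOW-MATCH implementation from the paper: the function names, the 10-degree half-angle, and the "pick the target closest to the cone axis" rule are all assumptions made for illustration.

```python
import math


def angle_to_axis(origin, direction, point):
    """Angle in radians between the cone axis and the vector origin -> point."""
    to_point = tuple(p - o for p, o in zip(point, origin))
    dist = math.sqrt(sum(c * c for c in to_point))
    axis_len = math.sqrt(sum(c * c for c in direction))
    if axis_len == 0:
        raise ValueError("direction must be a non-zero vector")
    if dist == 0:
        return 0.0  # the point sits exactly at the cone apex
    cos_angle = sum(d * t for d, t in zip(direction, to_point)) / (axis_len * dist)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.acos(cos_angle)


def cone_select(origin, direction, half_angle_deg, targets):
    """Return the name of the target nearest the cone axis, or None.

    `targets` maps a (hypothetical) object name to its 3D position.
    Only targets whose angular offset is within the half-angle qualify.
    """
    best_name, best_angle = None, math.radians(half_angle_deg)
    for name, position in targets.items():
        angle = angle_to_axis(origin, direction, position)
        if angle <= best_angle:
            best_name, best_angle = name, angle
    return best_name


# Hypothetical scene: the user points straight ahead along +z.
pollutants = {
    "bottle": (0.5, 0.0, 10.0),  # slightly off-axis, far away
    "can": (3.0, 0.0, 10.0),     # well outside a 10-degree cone
    "bag": (0.0, 0.0, -5.0),     # behind the user
}
selected = cone_select((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), 10.0, pollutants)
```

In this sketch the bottle is selected even though an exact ray along the pointing direction would miss it, which is the aiming tolerance a cone provides for small, distant objects. Narrowing the half-angle toward zero makes the behavior approach that of a raycast.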

Exploring the Effects of Level of Control in the Initialization of Shared Whiteboarding Sessions in Collaborative Augmented Reality

We explored the effects of three methods for beginning a collaborative whiteboarding session with varying levels of user control (MANUAL, DISCRETE CHOICE, and AUTOMATIC) by conducting a simulated AR study within virtual reality (VR). We evaluated these three conditions in a study in which two collaborators used each method to begin collaboration sessions. After establishing a session, the users worked together to complete an affinity diagramming task using the shared whiteboard. We found that the majority of participants preferred to have direct control during the initialization of a new collaboration session, despite the additional workload induced by the MANUAL method.

The Alchemist: A Gesture-Based 3D User Interface for Engaging Arithmetic Calculations

This paper presents our solution to the IEEE VR 2024 3DUI contest. We present The Alchemist, a VR experience tailored to aid children in practicing and mastering the four fundamental mathematical operators. In The Alchemist, players embark on a fantastical journey where they must prepare three potions to break a malevolent curse imprisoning the Gobbler kingdom. Our contributions include the development of a novel number input interface, Pinwheel, an extension of PizzaText, as well as four novel gestures, each corresponding to a distinct mathematical operator, designed to assist children in retaining practice with these operations. Preliminary tests indicate that Pinwheel and the four associated gestures facilitate the quick and efficient execution of mathematical operations.

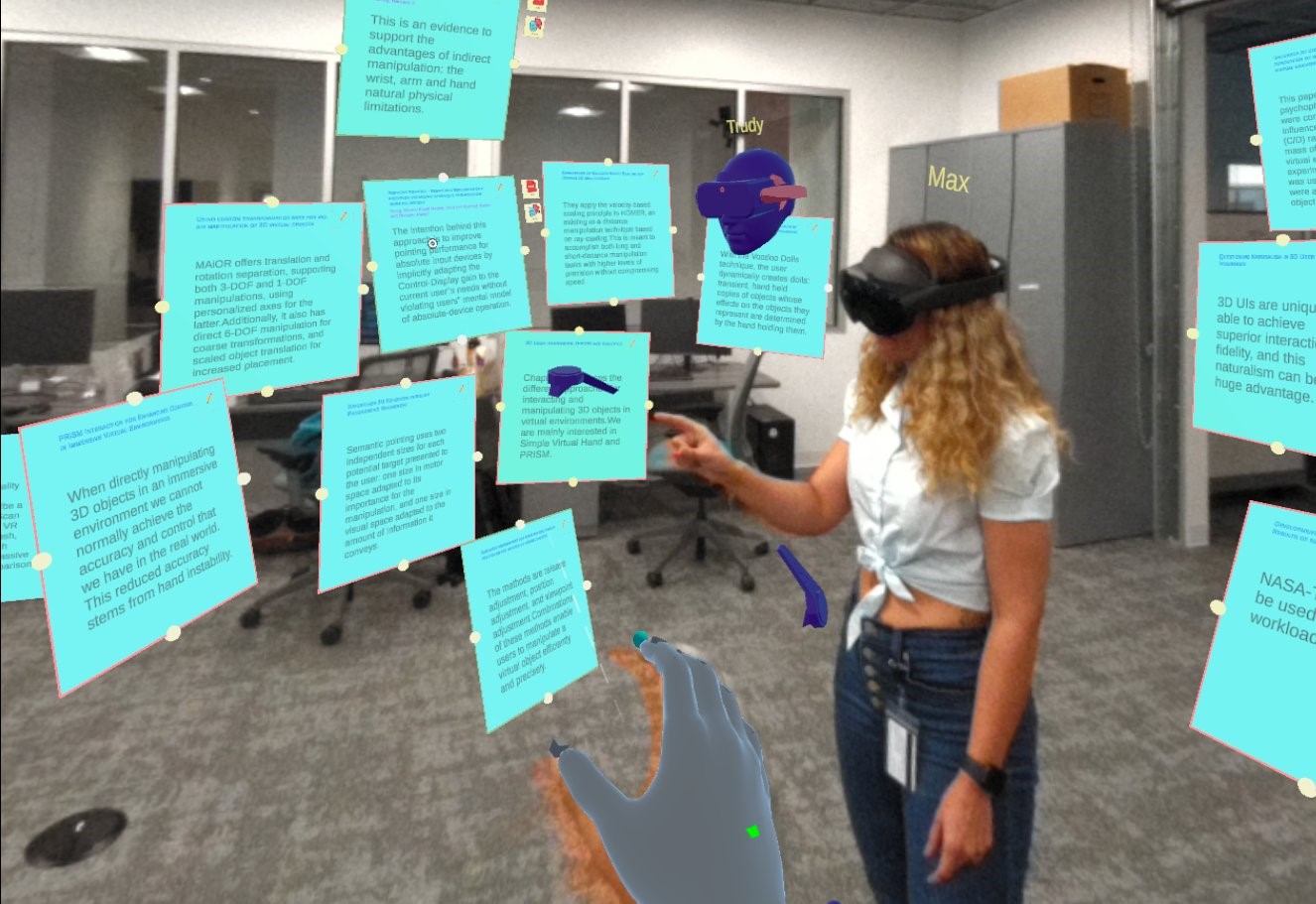

CoLT: Enhancing Collaborative Literature Review Tasks with Synchronous and Asynchronous Awareness Across the Reality-Virtuality Continuum

In the context of collaborative academic writing, while immersive technologies offer novel ways to enhance collaboration and enable efficient information exchange in a shared workspace, traditional devices such as laptops still offer better readability for longer articles. We propose the design of a hybrid cross-reality cross-device networked system that allows the users to harness the advantages of both worlds. Our system allows users to import documents from their personal computers (PC) to an immersive headset, facilitating document sharing and simultaneous collaboration with both colocated colleagues and remote colleagues. Our system also enables a user to seamlessly transition between Virtual Reality, Augmented Reality, and the traditional PC environment, all within a shared workspace. We present the real-world scenario of a global academic team conducting a comprehensive literature review, demonstrating its potential for enhancing cross-reality hybrid collaboration and productivity.

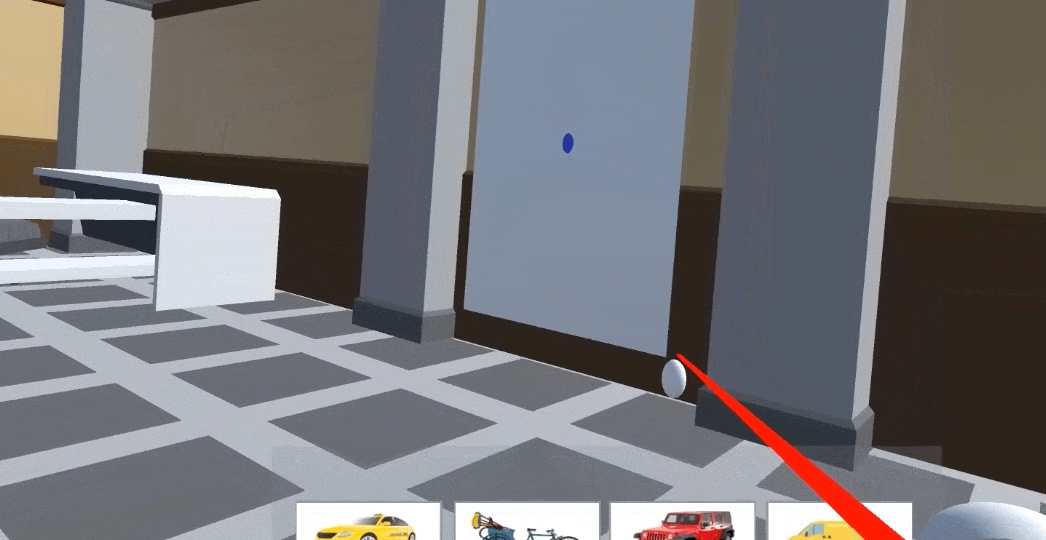

CLUE HOG: An Immersive Competitive Lock-Unlock Experience using Hook On Go-Go Technique for Authentication in the Metaverse

This paper presents our solution to the 2023 3DUI Contest challenge. Our goal was to provide an immersive VR experience to engage users in privately securing and accessing information in the Metaverse while improving authentication-related interactions inside our virtual environment. To achieve this goal, we developed an authentication method that uses a virtual environment's individual assets as security tokens. To improve the token selection process, we introduce the HOG interaction technique. HOG combines two classic interaction techniques, Hook and Go-Go, to improve approximate object targeting and further obfuscate users' password token selections. We created an engaging mystery-solving mini-game to demonstrate our authentication method and interaction technique.

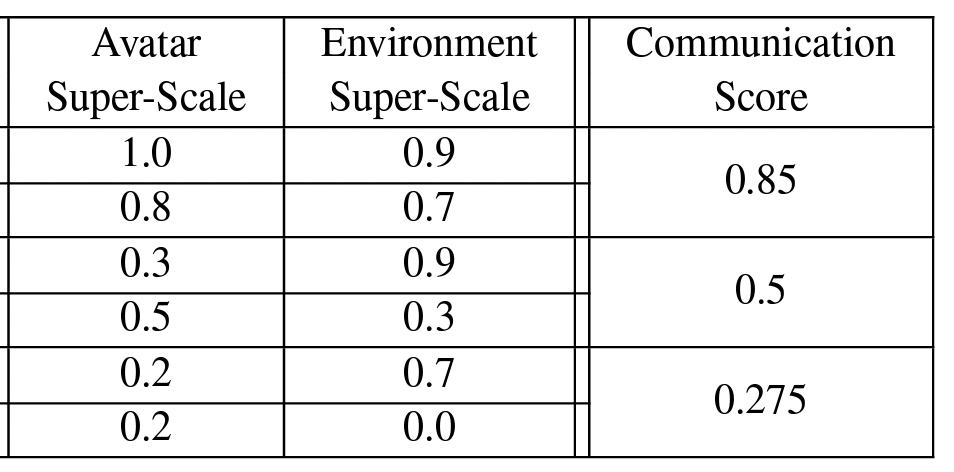

A Communication-Focused Framework for Understanding Immersive Collaboration Experiences

The ability to collaborate with other people across barriers created by time and/or space is one of the greatest features of modern communication. Immersive technologies are positioned to enhance this ability to collaborate even further. However, we do not have a firm understanding of how specific immersive technologies, or components thereof, alter the ability for two or more people to communicate, and hence collaborate. In this work-in-progress position paper, we propose a new framework for immersive collaboration experiences and provide an example of how it could be used to understand a hybrid collaboration among two co-located users and one remote user. We are seeking feedback from the community before conducting a formal evaluation of the framework. We also present some future work that this framework could facilitate.

Clean the Ocean: An Immersive VR Experience Proposing New Modifications to Go-Go and WiM Techniques

In this paper, we present our solution to the 2022 3DUI Contest challenge. We aim to provide an immersive VR experience that increases players' awareness of trash pollution in the ocean while improving current interaction techniques in virtual environments. To achieve these objectives, we adapted two classic interaction techniques, Go-Go and World in Miniature (WiM), to provide an engaging minigame in which the user collects trash in the ocean. To improve precision and address occlusion issues in the traditional Go-Go technique, we propose ReX Go-Go. We also propose an adaptation of WiM, referred to as Rabbit-Out-of-the-Hat, that allows exocentric interaction for easier object retrieval.